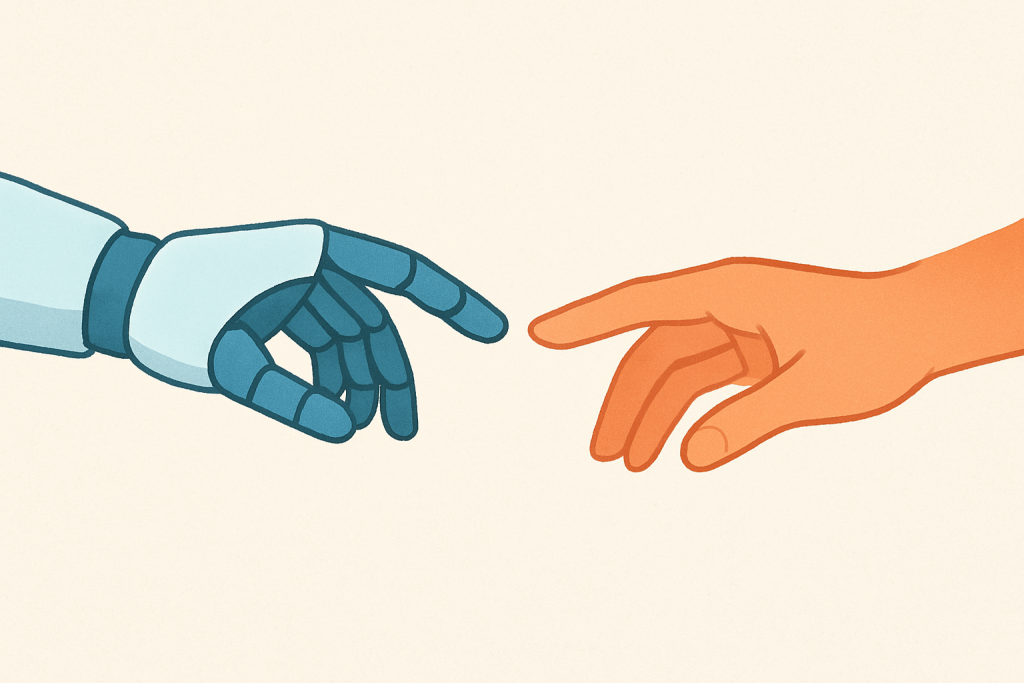

When we hear the word “handover,” we might think of a nurse passing on information at the end of a shift, or a car switching from self-driving mode back to the driver. Look more closely, however, and you will see that handovers are everywhere around us, and that they quietly shape how we work, collaborate, and share responsibility with both people and technologies. In a recent publication, we discuss handovers with the goal of broadening how the field of Human-Computer Interaction (HCI) thinks about them, especially now that Generative AI (GenAI) is entering many workplaces. The paper is co-authored by Ece Üreten (University of Oulu), Rebecca Cort (Uppsala University), and Torkil Clemmensen (Copenhagen Business School).

Traditionally, HCI research has looked at handovers as the moment when control of a system, such as a semi-autonomous car or a VR headset, passes from one person to another or from a machine to a human. However, handovers are more than just “passing the baton.” In today’s digital workplaces, where AI, automation, and human collaboration blend, handovers are crucial moments of communication, coordination, and shared understanding. Accordingly, in this publication we argue that handovers are more than technical events: they should be viewed as complex socio-technical interactions involving people, technology, organizational structures, and tasks.

As GenAI tools become more widespread, the rules of handovers are changing. These tools can summarize data, generate content, and even adapt to different communication styles, all of which could make handovers smoother and more reliable. But GenAI also raises new questions, such as: How should we design AI tools that understand the context of a handover? Can AI support empathy and human connection in teamwork? How do we make sure AI does not confuse, overwhelm, or mislead people during critical transitions? To tackle these questions, we propose a research agenda built around five key dimensions:

1. Technology – What tools and formats make handovers effective?

2. Tasks – What exactly is being handed over, and under what conditions?

3. Actors – Who is involved? (It is not just humans anymore.)

4. Structure – How are handovers shaped by organizational rules and culture?

5. Cross-domain – How do handovers differ from one domain to another?

In this paper, we emphasize that handovers differ across domains; we therefore call for cross-domain studies and more nuanced thinking about who (or what) is involved in these critical moments of communication, coordination, and shared understanding. The paper invites the HCI community to take handovers seriously, study them across different industries, and design future technologies with this human (and increasingly non-human) interaction in mind.

The full paper can be found here.